Jinhua Zhang, Wei Long, Minghao Han, Weiyi You, Shuhang Gu*

University of Electronic Science and Technology of China

*Corresponding Author

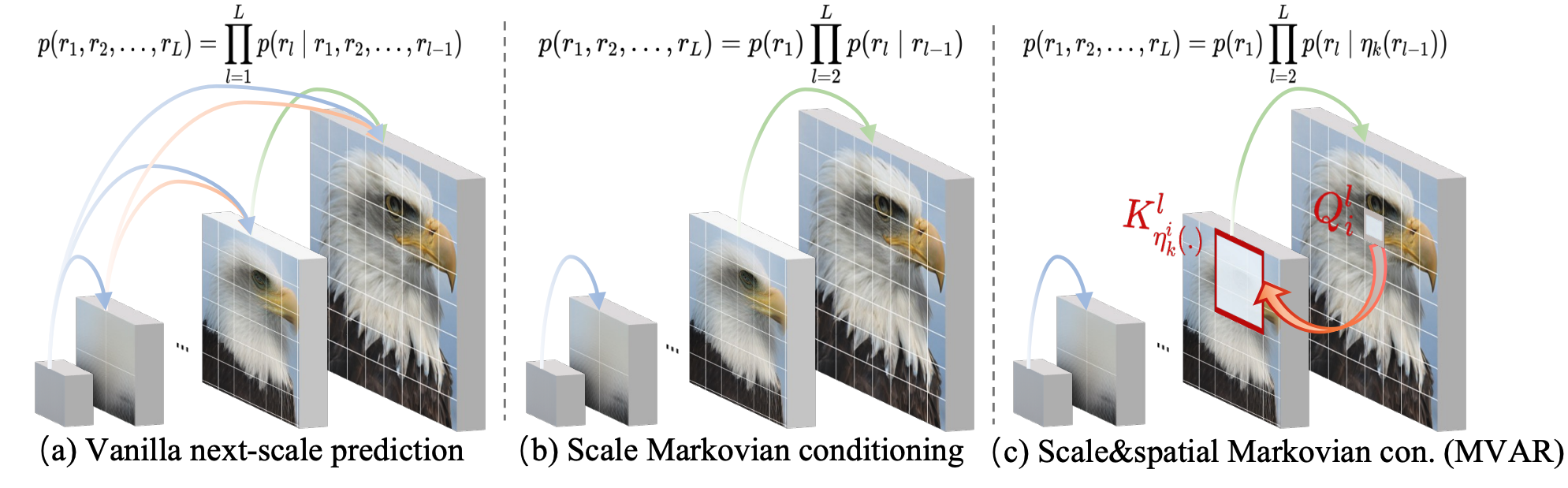

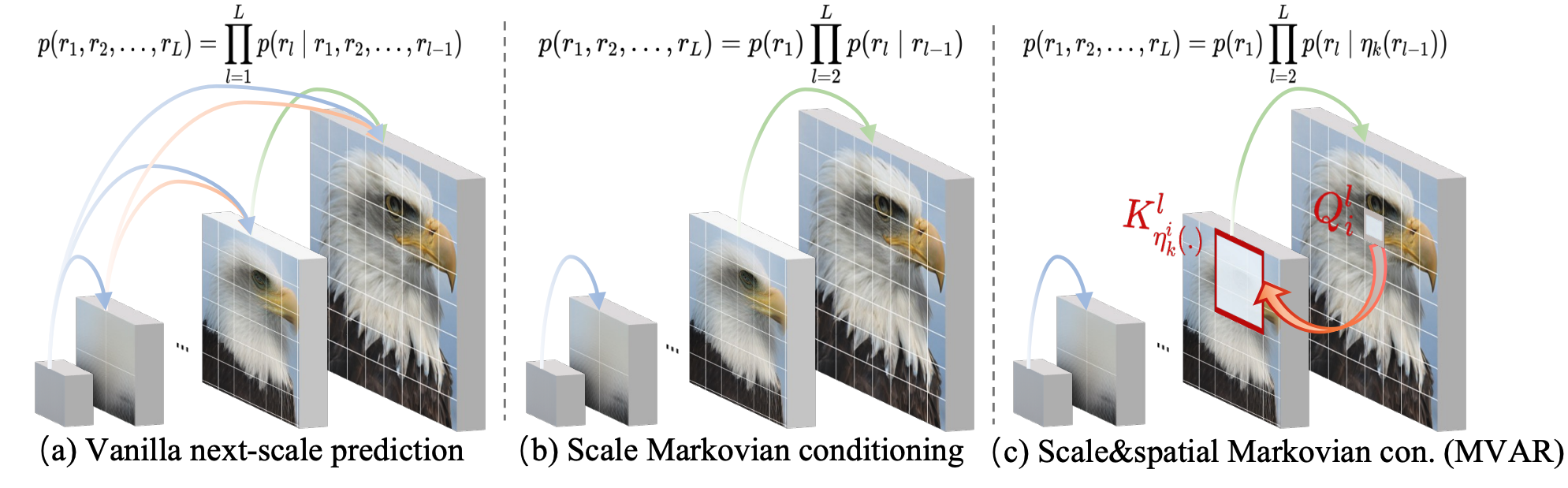

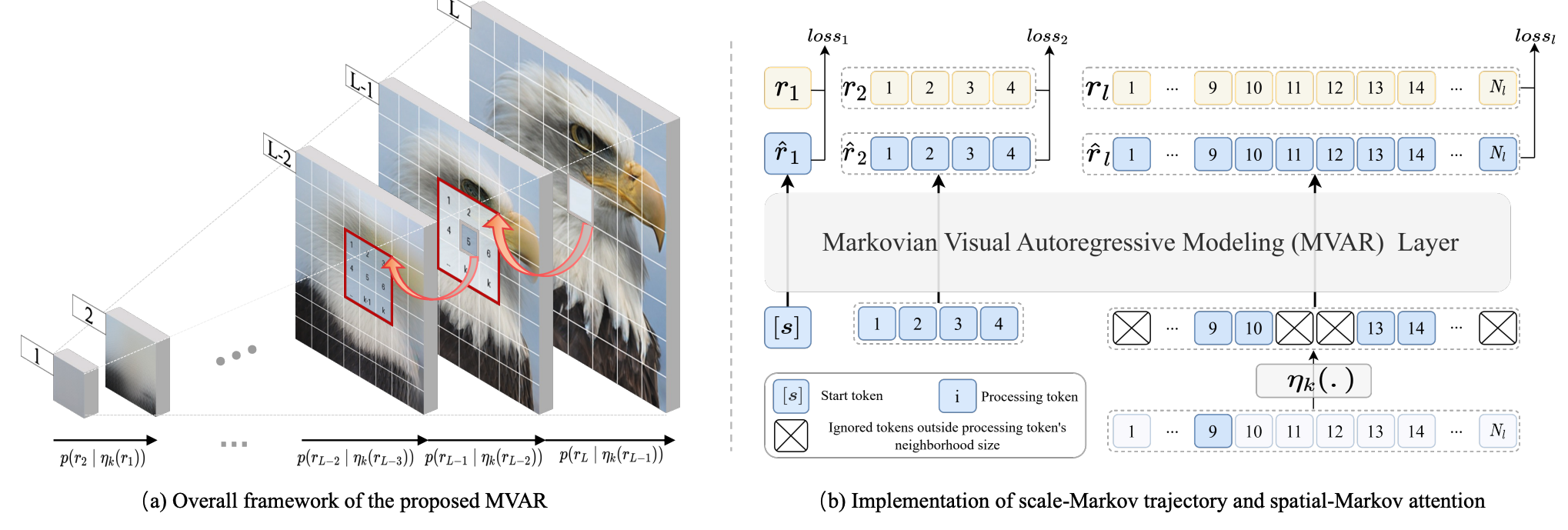

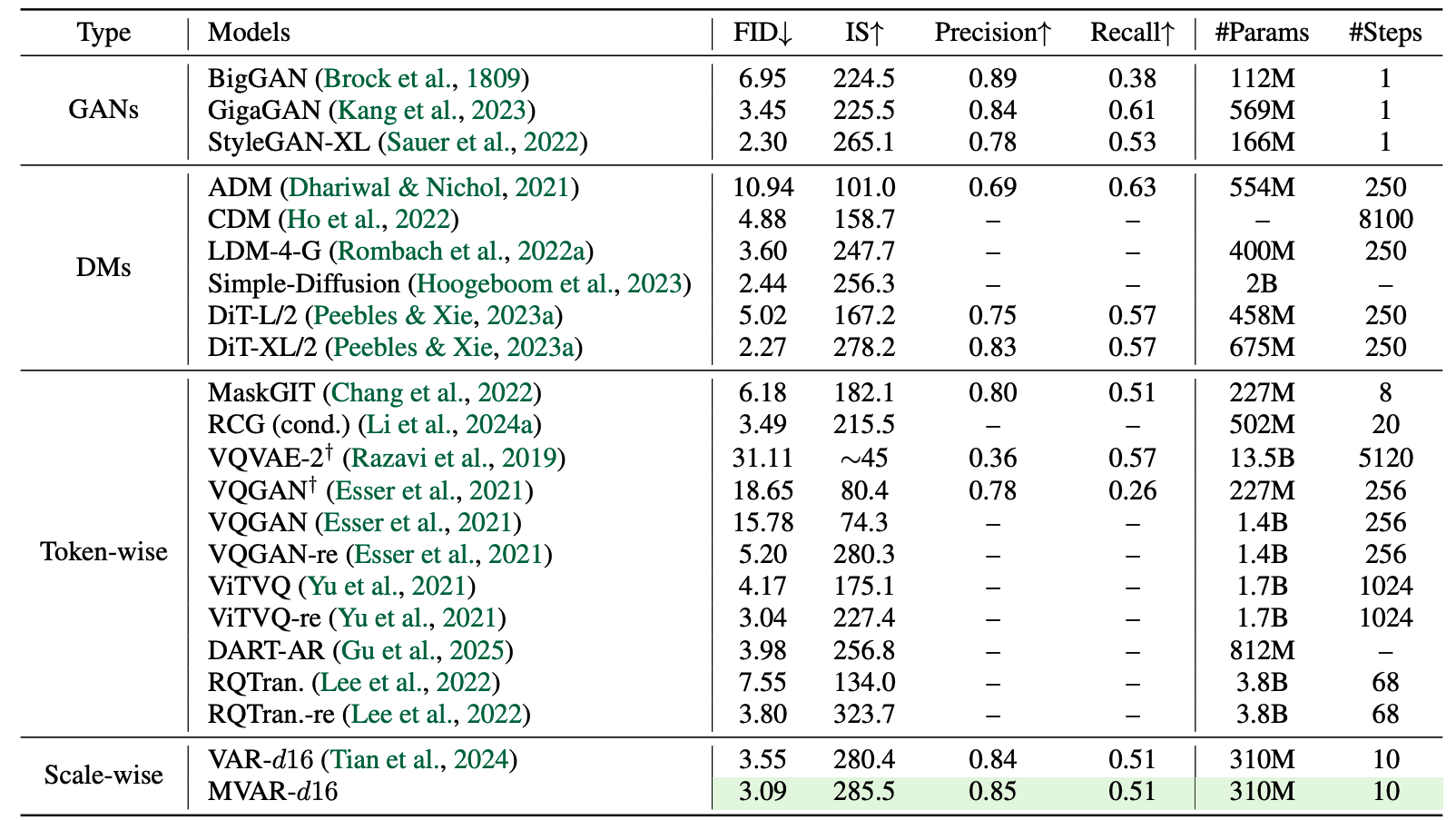

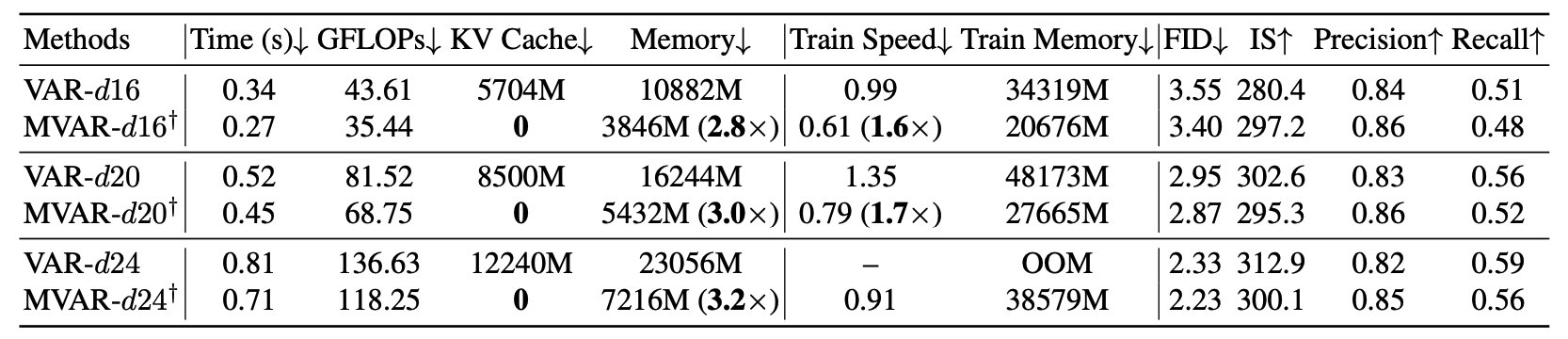

🔥 3.0× Memory Efficiency & KV-Cache-Free Inference. We propose Markovian Visual AutoRegressive modeling (MVAR), which introduces scale and spatial Markov assumptions to mitigate redundancy in visual generation. By reducing the complexity of attention computation from O(N^2) to O(N×k), where N is the number of tokens and k is the size of the local neighborhood each token attends to, MVAR enables parallel training on just eight RTX 4090 GPUs and achieves state-of-the-art performance on ImageNet.
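To make the O(N^2) versus O(N×k) comparison concrete, here is a minimal sketch that counts query-key pairs on a single token grid. It is not the MVAR implementation: the helper names (`neighborhood`, `attention_pairs`), the 16×16 grid, and the 7×7 window are illustrative assumptions. It also simplifies to one scale, whereas MVAR applies its locality constraint across adjacent scales.

```python
def neighborhood(h, w, i, j, win):
    """Return the win x win window of grid positions around token (i, j).

    The window start is clamped so the window stays inside the h x w grid,
    which means every token keeps exactly win * win neighbors.
    Assumes win <= h and win <= w.
    """
    r0 = min(max(i - win // 2, 0), h - win)
    c0 = min(max(j - win // 2, 0), w - win)
    return [(r, c) for r in range(r0, r0 + win) for c in range(c0, c0 + win)]


def attention_pairs(h, w, win=None):
    """Count query-key pairs on an h x w token grid.

    win=None models full attention (every token attends to every token);
    otherwise each token attends only to its win x win neighborhood.
    """
    n = h * w
    if win is None:
        return n * n  # O(N^2)
    # O(N * k) with k = win * win
    return sum(len(neighborhood(h, w, i, j, win))
               for i in range(h) for j in range(w))


if __name__ == "__main__":
    full = attention_pairs(16, 16)           # N = 256 tokens
    local = attention_pairs(16, 16, win=7)   # k = 49 neighbors per token
    print(f"full attention pairs:  {full}")
    print(f"local attention pairs: {local}")
```

With a fixed window, the pair count grows linearly in the number of tokens rather than quadratically, which is the source of the savings described above.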

Conventional visual autoregressive methods exhibit scale and spatial redundancy: each scale is conditioned on all previous scales, and each token attends to all preceding tokens. We propose MVAR, a novel framework that introduces scale and spatial Markov assumptions to reduce this redundancy.

[1] Tian K, Jiang Y, Yuan Z, et al. Visual autoregressive modeling: Scalable image generation via next-scale prediction[J]. Advances in neural information processing systems, 2024, 37: 84839-84865.

[2] Hassani A, Walton S, Li J, et al. Neighborhood attention transformer[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2023: 6185-6194.